Carrier Arrestor System Maintenance Errors

Eye-watering video has emerged of a mishap on the US aircraft carrier USS Dwight D. Eisenhower (CVN 69) in the Atlantic on 18 March 2016. The 1½-inch-thick steel arrestor wire failed during the landing of a Northrop Grumman E-2C Hawkeye, and during the go-around the E-2C dropped out of sight below the deck edge before climbing away. The investigation highlighted shortcomings in maintenance data.

Eight sailors on deck suffered a variety of injuries from the flailing cable, including a fractured skull and facial, ankle, wrist, pelvic and leg injuries, with one sailor receiving a possible traumatic brain injury. A Northrop Grumman C-2A Greyhound and a Sikorsky MH-60S Seahawk helicopter parked on deck also sustained about $82,000 in damage.

Safety Investigation

According to a US Navy investigation report obtained by The Virginian-Pilot through a Freedom of Information Act request:

… maintenance personnel missed at least one and possibly two “critical steps” while working on an engine that helps operate the carrier flight deck’s cables, which are called cross deck pendants, after a previous landing. As a result, the engine failed to slow the aircraft, instead causing the pendant to break “at or near” the Hawkeye’s tailhook. The report said that while there was a “lack of procedural compliance” while troubleshooting an error code from a previous arrested landing, “the sailors involved reasonably believed they had properly and conscientiously completed the complicated procedure.”

The maintenance personnel:

…were using an approved Navy procedure when they missed steps that led them to misprogram a valve that controls the gear engine’s pressure and energy absorption, according to the report. But that procedure lacked warnings, other notations and wasn’t “user friendly,” Navy investigators found. As a result, while those personnel failed to comply with a “technically correct written procedure,” the Navy found their error understandable because the procedure didn’t explain the basis for its steps, lacked supervisory controls and “failed to warn users of the critical nature” of the valve’s realignment. A command investigation into the incident included recommendations for the development of additional controls for troubleshooting the carrier’s aircraft recovery system as well as a review of the system’s procedures to add necessary warnings, cautions and quality assurance.

However, disappointingly, despite recognising the shortcomings in the maintenance data and that “the sailors involved reasonably believed they had properly and conscientiously completed the complicated procedure”:

It also included recommendations that Capt. Paul Spedero, commanding officer of the Ike, consider formal counseling, fitness evaluations, qualification removal, requalification or administrative actions for three others whose names were redacted.

Other Safety Resources

James Reason’s 12 Principles of Error Management

Aerossurance worked with the Flight Safety Foundation (FSF) to create a Maintenance Observation Program (MOP) requirement for their contractible BARSOHO offshore helicopter Safety Performance Requirements to help learning about routine maintenance and then to initiate safety improvements.

Aerossurance can provide practice guidance and specialist support to successfully implement a MOP.

UPDATE 9 November 2016: USMC CH-53E Readiness Crisis and Mid Air Collision Catastrophe

UPDATE 8 February 2018: The UK Rail Safety and Standards Board (RSSB) say: Future safety requires new approaches to people development. They say that in the future rail system “there will be more complexity with more interlinked systems working together”:

…the role of many of our staff will change dramatically. The railway system of the future will require different skills from our workforce. There are likely to be fewer roles that require repetitive procedure following and more that require dynamic decision making, collaborating, working with data or providing a personalised service to customers. A seminal white paper on safety in air traffic control acknowledges the increasing difficulty of managing safety with rule compliance as the system complexity grows: ‘The consequences are that predictability is limited during both design and operation, and that it is impossible precisely to prescribe or even describe how work should be done.’ Since human performance cannot be completely prescribed, some degree of variability, flexibility or adaptivity is required for these future systems to work.

They recommend:

- Invest in manager skills to build a trusting relationship at all levels.

- Explore ‘work as done’ with an open mind.

- Shift focus of development activities onto ‘how to make things go right’ not just ‘how to avoid things going wrong’.

- Harness the power of ‘experts’ to help develop newly competent people within the context of normal work.

- Recognise that workers may know more about what it takes for the system to work safely and efficiently than your trainers and managers.

UPDATE 12 February 2018: Safety blunders expose lab staff to potentially lethal diseases in UK. Tim Trevan, a former UN weapons inspector who now runs Chrome Biosafety and Biosecurity Consulting in Maryland, said safety breaches are often wrongly explained away as human error.

Blaming it on human error doesn’t help you learn, it doesn’t help you improve. You have to look deeper and ask: ‘what are the environmental or cultural issues that are driving these things?’ There is nearly always something obvious that can be done to improve safety. One way to address issues in the lab is you don’t wait for things to go wrong in a major way: you look at the near-misses. You actively scan your work on a daily or weekly basis for things that didn’t turn out as expected. If you do that, you get a better understanding of how things can go wrong. Another approach is to ask people who are doing the work what is the most dangerous or difficult thing they do. Or what keeps them up at night. These are always good pointers to where, on a proactive basis, you should be addressing things that could go wrong.

UPDATE 13 February 2018: Considering human factors and developing systems-thinking behaviours to ensure patient safety

Medication errors are too frequently assigned as blame towards a single person. By considering these errors as a system-level failure, healthcare providers can take significant steps towards improving patient safety. ‘Systems thinking’ is a way of better understanding complex workplace issues; exploring relationships between system elements to inform efforts to improve; and realising that ‘cause and effect’ are not necessarily closely related in space or time. This approach does not come naturally and is neither well-defined nor routinely practised…. When under stress, the human psyche often reduces complex reality to linear cause-and-effect chains. Harm and safety are the results of complex systems, not single acts.

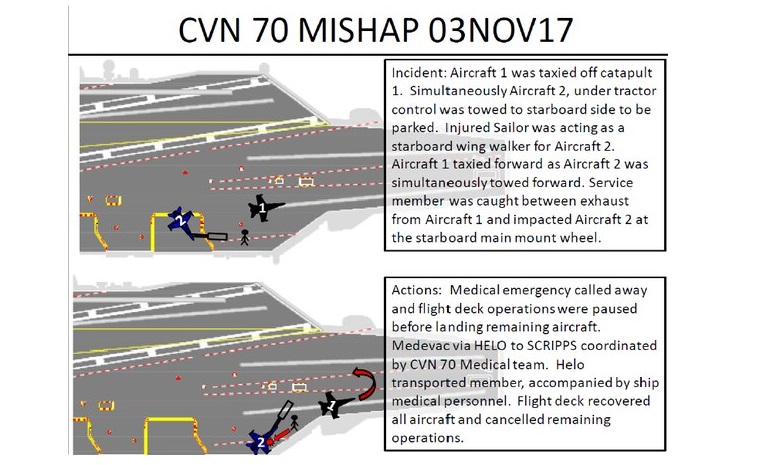

UPDATE 11 August 2019: A US Navy sailor was crushed to death when an SH-60 helicopter external fuel tank was accidentally jettisoned during a turnaround. In another case, a sailor was knocked over by jet blast on the deck of aircraft carrier USS Carl Vinson and then run over by another aircraft.

Aerossurance is pleased to be both sponsoring and presenting at a Royal Aeronautical Society (RAeS) Human Factors Group: Engineering seminar, ‘Maintenance Error: Are we learning?’, to be held on 9 May 2019 at Cranfield University.
