Back to the Future: Error Management

A year ago we published our article James Reason’s 12 Principles of Error Management. It set out the 12 systemic, human-factors-centric principles of error management that James Reason, Professor Emeritus, University of Manchester, defined in his book Managing Maintenance Error: A Practical Guide (co-written with Alan Hobbs and published in 2003).

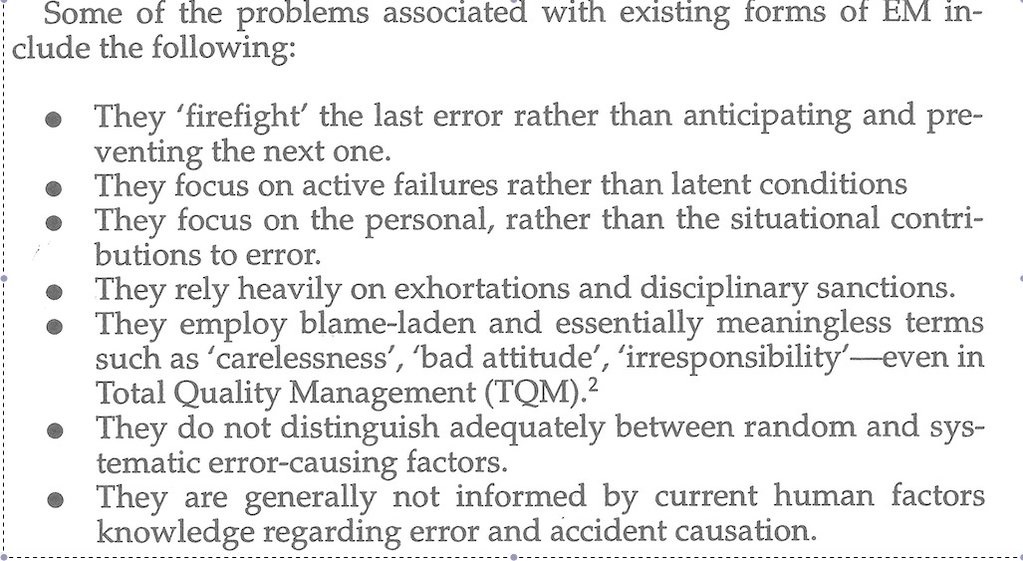

Recently we spotted a photograph of some text in a Tweet. It is from Reason’s earlier 1997 classic Managing the Risks of Organizational Accidents and was a timely reminder:

While mention of TQM looks rather dated now, sadly we do wonder how far we have collectively moved as an industry since then:

- How many organisations still await occurrence or hazard reports from the front-line rather than conducting active oversight or encouraging safety improvement action?

- How many organisations spend more time analysing individual behaviour after an occurrence to determine culpability than analysing the factors that affect individual performance before an occurrence?

We certainly see regular accident reports that suggest we can do better:

- Fatal $16 Million Maintenance Errors

- Misassembled Anti-Torque Pedals Cause EC135 Accident

- The Missing Igniters: Fatigue & Management of Change Shortcomings

- A319 Double Cowling Loss and Fire – AAIB Report

- USAF RC-135V Rivet Joint Oxygen Fire

- Inadequately Secured Cargo Caused B747F Crash at Bagram, Afghanistan

- BA Changes Briefings, Simulator Training and Chart Provider After B747 Accident

- Gulfstream G-IV Take Off Accident & Human Factors

- Fatal G-IV Runway Excursion Accident in France – Lessons

- ‘Procedural Drift’: Lynx CFIT in Afghanistan

- Fatal Night-time UK AW139 Accident Highlights Business Aviation Safety Lessons

- Fatal Helicopter / Crane Collision – London Jan 2013

- Misloading Caused Fatal 2013 DHC-3 Accident

- Metro III Low-energy Rejected Landing and CFIT

- Operator & FAA Shortcomings in Alaskan B1900 Accident

- Culture + Non Compliance + Mechanical Failures = DC3 Accident

- Mid Air Collision Typhoon & Learjet 35

- Metro-North: Organisational Accidents

- DuPont Reputational Explosion

- Shell Moerdijk Explosion: “Failure to Learn”

Further, as a society, we still see human error being defined as a cause:

- Saarbrücken flight: Human error determined as cause of accident

- Two workers quizzed over ‘human error ‘ in Alton Towers horror

- U.S. general: Human error led to Doctors Without Borders strike

Of course we can take heart that many practitioners are making amazing strides in applying Reason’s 12 Principles, enhancing their organisation’s safety culture and looking at other ways to enhance human performance as we discussed here:

- Maintenance Human Factors: The Next Generation

- Aircraft Maintenance: Going for Gold?

- How To Develop Your Organisation’s Safety Culture

- The Power of Safety Leadership

A follow-up to the original book, entitled Organizational Accidents Revisited, is due to be published by Ashgate in January 2016 on the topic of what it abbreviates to ‘orgax’. It is reported that:

Where the 1997 book focused largely upon the systemic factors underlying organizational accidents, this complementary follow-up goes beyond this to examine what can be done to improve the ‘error wisdom’ and risk awareness of those on the spot; they are often the last line of defence and so have the power to halt the accident trajectory before it can cause damage. The book concludes by advocating that system safety should require the integration of systemic factors (collective mindfulness) with individual mental skills (personal mindfulness). Contents:

- Introduction.

- Part 1 Refreshers: The ‘anatomy’ of an organizational accident; Error-enforcing conditions.

- Part 2 Additions Since 1997: Safety management systems; Resident pathogens; Ten case studies of organizational accidents; Foresight training; Alternative views; Retrospect and prospect; Taking stock; Heroic recoveries.

- Index

The 10 case studies are: three from healthcare, two radiation releases, one rail accident, two hydrocarbon explosions and two air accidents.

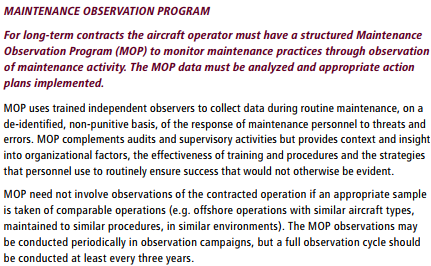

Aerossurance worked with the Flight Safety Foundation (FSF) to create a Maintenance Observation Program (MOP) requirement for their contractible BARSOHO offshore helicopter Safety Performance Requirements to help learning about routine maintenance and then to initiate safety improvements:

Aerossurance can provide practical guidance and specialist support to successfully implement a MOP.

Amy Edmondson discusses psychological safety and openness:

UPDATE: 28 August 2016: We look at an EU research project that recently investigated the concepts of organisational safety intelligence (the safety information available) and executive safety wisdom (in using that to make safety decisions) by interviewing 16 senior industry executives: Safety Intelligence & Safety Wisdom. They defined these as:

Safety Intelligence: the various sources of quantitative information an organisation may use to identify and assess various threats. Safety Wisdom: the judgement and decision-making of those in senior positions who must decide what to do to remain safe, and how they also use quantitative and qualitative information to support those decisions.

The topic of weak or ambiguous signals was discussed in this 2006 article: Facing Ambiguous Threats. It is also covered in a paper by the Health and Safety Laboratory that is worth attention, High Reliability Organisations [HROs] and Mindful Leadership, and in a paper by Andrew Hopkins at the ANU.

UPDATE 16 February 2017: Aerossurance is delighted to be sponsoring an RAeS HFG:E conference at Cranfield University on 9 May 2017, on the topic of Staying Alert: Managing Fatigue in Maintenance. This event will feature presentations and interactive workshop sessions.

UPDATE 1 March 2017: Safety Performance Listening and Learning – AEROSPACE March 2017

Organisations need to be confident that they are hearing all the safety concerns and observations of their workforce. They also need the assurance that their safety decisions are being actioned. The RAeS Human Factors Group: Engineering (HFG:E) set out to find out a way to check if organisations are truly listening and learning.

The result was a self-reflective approach to find ways to stimulate improvement.

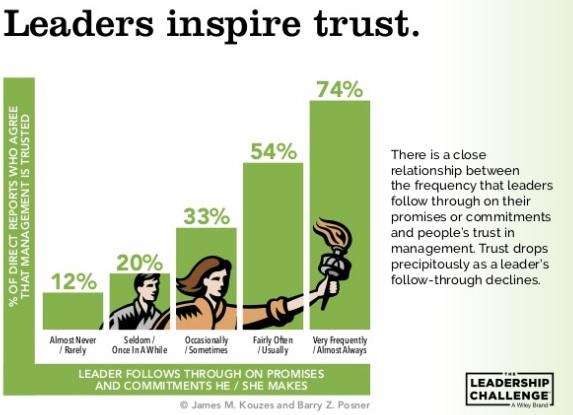

UPDATE 22 March 2017: Which difference do you want to make through leadership? (a presentation based on the work of Jim Kouzes and Barry Posner). Note slide 6 in particular:

UPDATE 25 March 2017: In a commentary on the NHS annual staff survey, trust is emphasised again:

Developing a culture where quality and improvement are central to an organisation’s strategy requires high levels of trust, and trust that issues can be raised and dealt with as an opportunity for improvement. There is no doubt that without this learning culture, with trust as a central behaviour, errors and incidents will only increase.

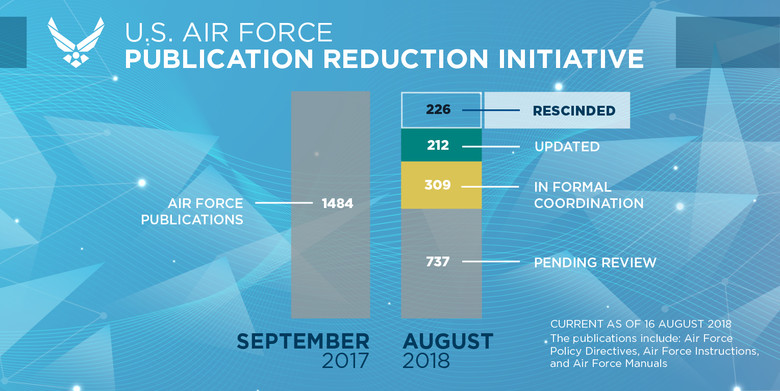

UPDATE 4 August 2017: The US Air Force plans to “significantly reduce unnecessary Air Force instructions over the next 24 months”.

Secretary of the Air Force Heather Wilson said that “the 1,300 official instructions are often outdated and inconsistent, breeding cynicism when Airmen feel they cannot possibly follow every written rule”.

The effort will start with the 40 percent of instructions that are out of date and those identified by Airmen as top priorities.

“The first step will target immediate rescission,” Wilson said. “We want to significantly reduce the number of publications, and make sure the remaining ones are current and relevant.”

The second phase will be a review of all other directive publications issued by Headquarters Air Force. These publications contain more than 130,000 compliance items at the wing level. Publications should add value, set policy and describe best practices, she said.

Wilson emphasises trust: trust in the judgement, experience and training of airmen, rather than prescribing everything.

Think about that. There are 130,000 ways a ‘culpability’ or ‘accountability’ decision aid could be used, counter-productively, to judge 320,000 service personnel and 140,000 civilians.

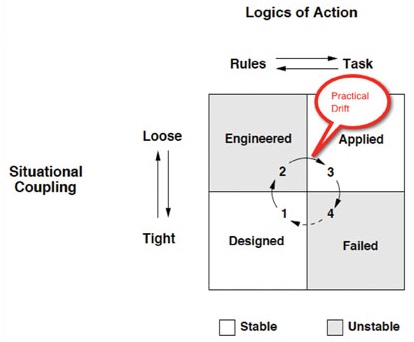

One wonders how many were created by a lack of trust or due to practical drift (a concept discussed in Friendly Fire: The Accidental Shootdown of U.S. Black Hawks over Northern Iraq). Despite self-serving nonsense pushed by some consultants who haven’t studied Snook (e.g. the type who put compliance at the heart of everything), this practical drift is not about drifting from procedures as designed, but about the continual addition of bureaucracy making the system unworkable to the point that a failure occurs.

UPDATE 4 December 2017: United Airways Suffers from ED (Error Dysfunction). The US airline shows a strange grasp of human factors principles and argues AGAINST a human centred design change intended to address a long running series of maintenance errors.

UPDATE 8 February 2018: The UK Rail Safety and Standards Board (RSSB) say: Future safety requires new approaches to people development. They say that in the future rail system “there will be more complexity with more interlinked systems working together”:

…the role of many of our staff will change dramatically. The railway system of the future will require different skills from our workforce. There are likely to be fewer roles that require repetitive procedure following and more that require dynamic decision making, collaborating, working with data or providing a personalised service to customers. A seminal white paper on safety in air traffic control acknowledges the increasing difficulty of managing safety with rule compliance as the system complexity grows: ‘The consequences are that predictability is limited during both design and operation, and that it is impossible precisely to prescribe or even describe how work should be done.’

Since human performance cannot be completely prescribed, some degree of variability, flexibility or adaptivity is required for these future systems to work.

They recommend:

- Invest in manager skills to build a trusting relationship at all levels.

- Explore ‘work as done’ with an open mind.

- Shift focus of development activities onto ‘how to make things go right’ not just ‘how to avoid things going wrong’.

- Harness the power of ‘experts’ to help develop newly competent people within the context of normal work.

- Recognise that workers may know more about what it takes for the system to work safely and efficiently than your trainers and managers.

UPDATE 12 February 2018: Safety blunders expose lab staff to potentially lethal diseases in UK. Tim Trevan, a former UN weapons inspector who now runs Chrome Biosafety and Biosecurity Consulting in Maryland, said safety breaches are often wrongly explained away as human error.

Blaming it on human error doesn’t help you learn, it doesn’t help you improve. You have to look deeper and ask: ‘what are the environmental or cultural issues that are driving these things?’

There is nearly always something obvious that can be done to improve safety. One way to address issues in the lab is you don’t wait for things to go wrong in a major way: you look at the near-misses. You actively scan your work on a daily or weekly basis for things that didn’t turn out as expected. If you do that, you get a better understanding of how things can go wrong.

Another approach is to ask people who are doing the work what is the most dangerous or difficult thing they do. Or what keeps them up at night. These are always good pointers to where, on a proactive basis, you should be addressing things that could go wrong.

UPDATE 13 February 2018: Considering human factors and developing systems-thinking behaviours to ensure patient safety

Medication errors are too frequently assigned as blame towards a single person. By considering these errors as a system-level failure, healthcare providers can take significant steps towards improving patient safety.

‘Systems thinking’ is a way of better understanding complex workplace issues; exploring relationships between system elements to inform efforts to improve; and realising that ‘cause and effect’ are not necessarily closely related in space or time.

This approach does not come naturally and is neither well-defined nor routinely practised…. When under stress, the human psyche often reduces complex reality to linear cause-and-effect chains.

Harm and safety are the results of complex systems, not single acts.

UPDATE 2 March 2018: An excellent initiative to create more Human Centred Design by use of a Human Hazard Analysis is described in Designing out human error:

HeliOffshore, the global safety-focused organisation for the offshore helicopter industry, is exploring a fresh approach to reducing safety risk from aircraft maintenance. Recent trials with Airbus Helicopters and HeliOne show that this new direction has promise. The approach is based on an analysis of the aircraft design to identify where ‘error proofing’ features or other mitigations are most needed to support the maintenance engineer during critical maintenance tasks.

The trial identified the opportunity for some process improvements, and discussions facilitated by HeliOffshore are planned for early 2018.

UPDATE 11 April 2018: The Two Traits of the Best Problem-Solving Teams (emphasis added):

…groups that performed well treated mistakes with curiosity and shared responsibility for the outcomes. As a result people could express themselves, their thoughts and ideas without fear of social retribution. The environment they created through their interaction was one of psychological safety.

Without behaviors that create and maintain a level of psychological safety in a group, people do not fully contribute — and when they don’t, the power of cognitive diversity is left unrealized. Furthermore, anxiety rises and defensive behavior prevails.

We choose our behavior. We need to be more curious, inquiring, experimental and nurturing. We need to stop being hierarchical, directive, controlling, and conforming.

We believe this applies to all teams, not just those solving problems. The retrospective management application of culpability decision aids has no more of a place when trying to solve problems than it does in other work activities.

UPDATE 29 August 2018: An update on the USAF Publication Reduction Initiative.

UPDATE 24 October 2022: The Royal Aeronautical Society (RAeS) has launched the Development of a Strategy to Enhance Human-Centred Design for Maintenance. Aerossurance’s Andy Evans is pleased to have had the chance to participate in this initiative.

Aerossurance is pleased to be supporting the annual Chartered Institute of Ergonomics & Human Factors’ (CIEHF) Human Factors in Aviation Safety Conference for the third year running. This year the conference takes place 13 to 14 November 2017 at the Hilton London Gatwick Airport, UK with the theme: How do we improve human performance in today’s aviation business?

Aerossurance is pleased to be both sponsoring and presenting at a Royal Aeronautical Society (RAeS) Human Factors Group: Engineering seminar Maintenance Error: Are we learning? to be held on 9 May 2019 at Cranfield University.
